The Supercomputing conference this year was in Austin, TX. I was down there for a reconfigurable computing workshop on Monday and spent a day and a half in the exhibit hall.

The workshop on Monday had 100 or so attendees, with a mix of academic papers and FPGA industry presentations and panels. The key takeaway for me was that reconfigurable computing (RC) for HPC applications (scientific computing, bioinformatics, finance, etc.) is now well proven, but there are still issues with platform and tool maturity that we all need to work out before RC is mainstream. GPU-based acceleration has gained traction fast because GPUs are viewed as safe and widely available. Everyone has a graphics card of some sort, so using one to accelerate algorithms does not seem exotic. That’s not yet the case with FPGAs.

Steve Wallach presented the Convey technology in a Monday morning session. Wallach also won the Seymour Cray Award this year. For those who don’t know his history, Wallach was a character in Tracy Kidder’s “The Soul of a New Machine” as well as a key innovator at Convex and HP. I like the Convey approach because it emphasizes the use of commodity processors, commodity FPGAs, and well-understood C-language and Fortran programming flows. The FPGAs in the system are used to implement accelerator “personalities” that are described by Convey as follows:

“Personalities are extensions to the x86 instruction set that are implemented in hardware and optimize performance of specific portions of an application. For example, a personality designed for seismic processing may implement 32-bit complex arithmetic instructions — and at performance levels well beyond that of a commodity processor.”

The goal appears to be a library-based approach, in which developers do not directly program the FPGAs. Convey indicates that a Personality Development Kit will be available, presumably for more advanced users or system integrators.

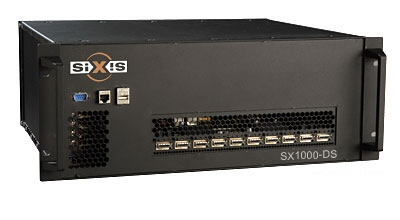

siXis Technology also presented during the workshop. Their platform is called the SX1000 and is a stacked FPGA module architecture with a toroidal interconnect. Very dense, with 16 or more high-end FPGAs in a small cube. Very cool. Or very hot, as the case may be. If there is a secret sauce to the siXis approach, it must be in the sauce they use to cool all those FPGAs stacked so close together.

NVIDIA had a larger presence this year than last. The Tesla platform is getting a lot of attention. But I also overheard more than a few comments regarding Intel Larrabee being a possible NVIDIA killer. And AMD is making a lot of noise (including large advertisements in the WSJ) about Fusion. 2009 could see a knock-down fight among Intel, AMD, and NVIDIA for the acceleration business. I’m not making any predictions here.

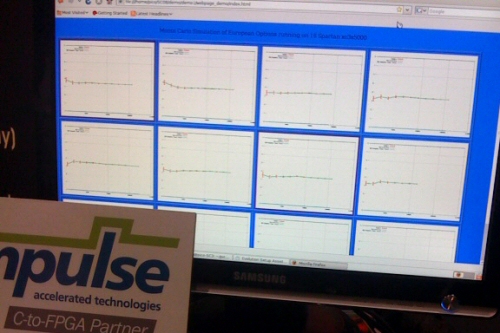

The Pico Computing booth featured two Impulse demos: an edge-detection video demo and our 16-FPGA options valuation demonstration. The options demo looked very nice, with 16 graphs generated by the 16 FPGAs, each of which was running an accelerated Monte Carlo simulation. Check it out: